F-22Raptor

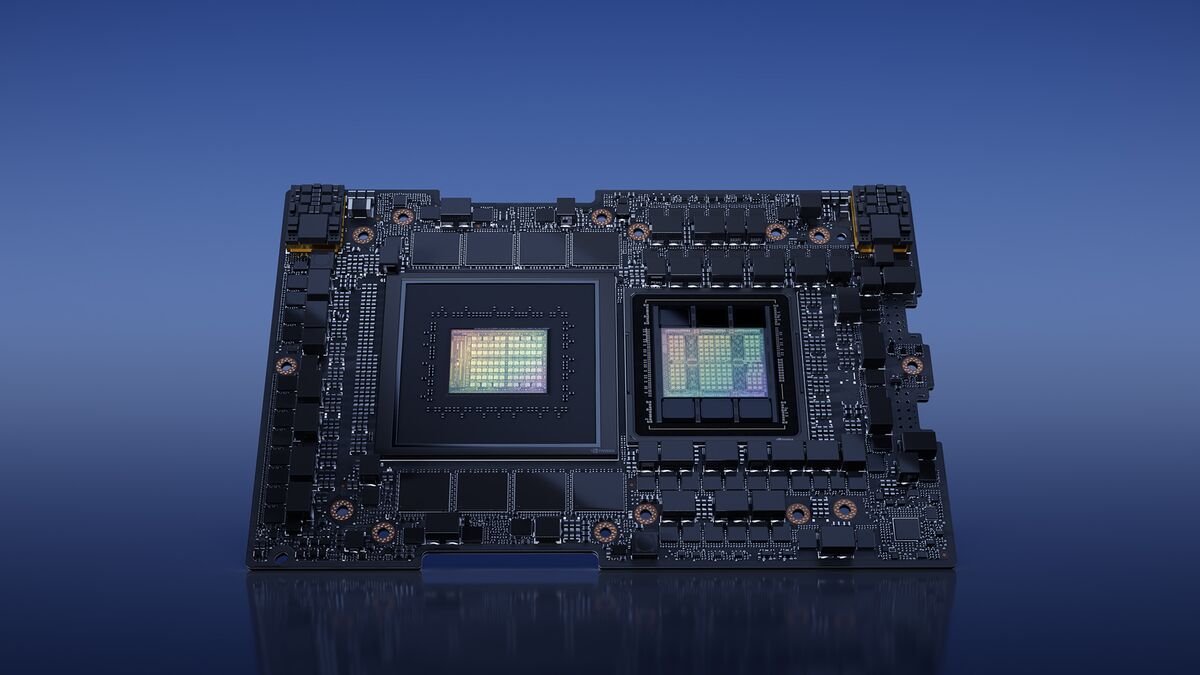

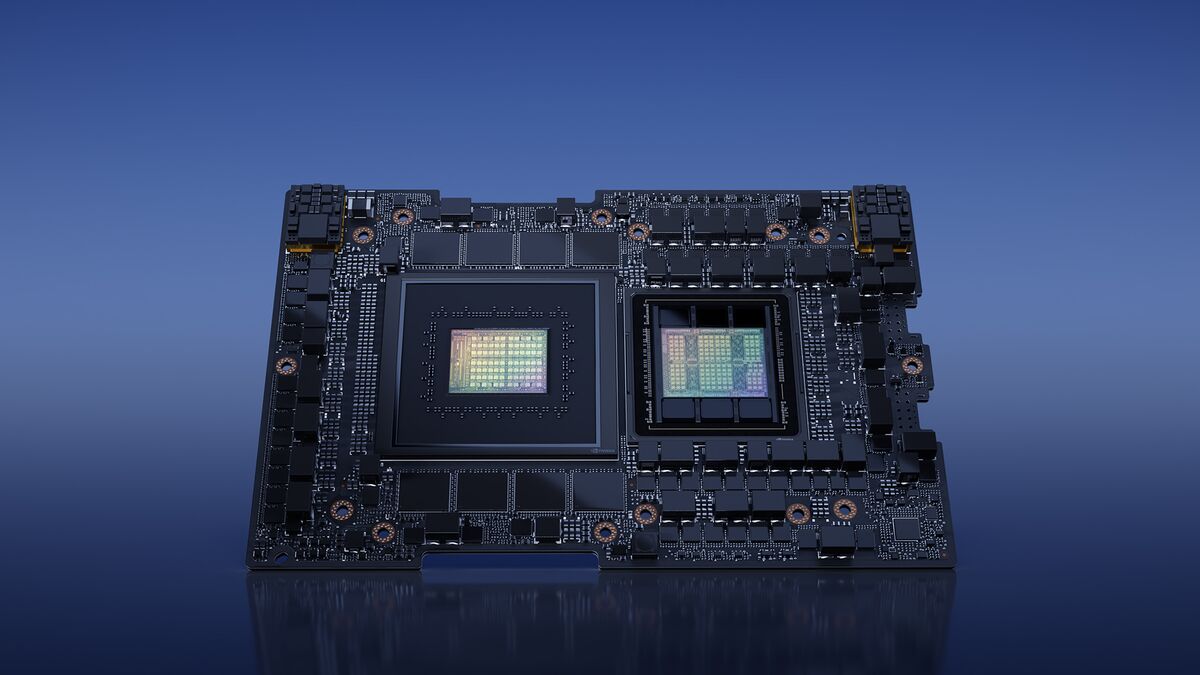

NVIDIA GH200 Grace Hopper Superchip. Source: Nvidia Corp.

By Ian King

August 8, 2023 at 3:32 PM UTC

Nvidia Corp. announced an updated AI processor that gives a jolt to the chip’s capacity and speed, seeking to cement the company’s dominance in a burgeoning market.

The Grace Hopper Superchip, a combination graphics chip and processor, will get a boost from a new type of memory, Nvidia said at the Siggraph conference in Los Angeles. The product relies on HBM3e, an enhanced version of high-bandwidth memory 3, which is able to access information at a blazing 5 terabytes per second.

The Superchip, known as GH200, will go into production in the second quarter of 2024, Nvidia said. It’s part of a new lineup of hardware and software that’s being announced at the event, a computer-graphics expo where Chief Executive Officer Jensen Huang is speaking.

Nvidia has built an early lead in the market for so-called AI accelerators, chips that excel at crunching data in the process of developing artificial intelligence software. That’s helped propel the company’s valuation past $1 trillion this year, making it the world’s most valuable chipmaker. The latest processor signals that Nvidia aims to make it hard for rivals like Advanced Micro Devices Inc. and Intel Corp. to catch up.

Huang has turned a 30-year-old graphics chips business into the top seller of equipment for training AI models, a process that involves sifting through massive amounts of data. Now that AI tools like ChatGPT and Google Bard are catching on with consumers and businesses, companies are racing to add Nvidia technology to handle the workload.

The Superchip will serve as the heart of a new server computer design that can handle a greater amount of information and access it more quickly — a key advantage given the tsunami of data flowing through AI models. Artificial intelligence training gets a boost if the chip can load a model in one go and update it without having to offload parts to slower forms of memory. That saves power and speeds the whole process up.

In servers, two of the chips can be deployed together, offering more than 3.5 times the capacity of an existing model, Nvidia said. That will let customers deploy fewer machines or get work done far quicker.

Nvidia Unveils Faster Processor Aimed at Cementing AI Dominance
