Hamartia Antidote

AWS Cramming up to 20,000 Nvidia GPUs in AI Supercomputing Clusters

The AI supercomputing options in the cloud have expanded at an unprecedented rate over the last few weeks.

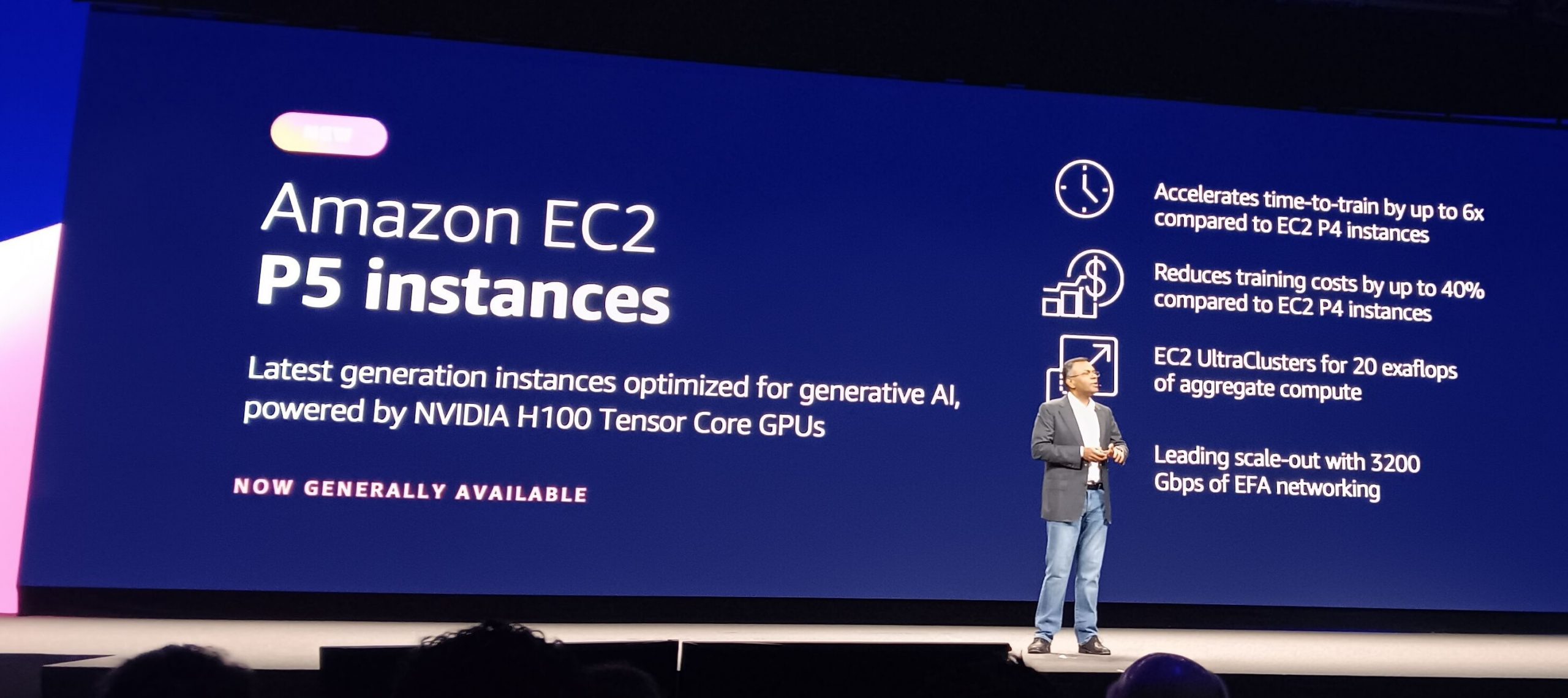

Amazon joined the party on Wednesday by announcing EC2 P5 virtual machine instances in AWS, which will run on Nvidia H100 GPUs.

The P5 instances can be joined into GPU clusters, which AWS calls EC2 UltraClusters, that provide aggregate performance of up to 20 exaflops.

Customers will be able to scale up to 20,000 H100 GPUs in each UltraCluster and deploy ML models with billions or trillions of parameters.
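As a rough sanity check on those two figures, the quoted aggregate can be divided by the quoted GPU count. The sketch below is only arithmetic on the article's numbers; the article does not say which numeric precision the 20-exaflop figure refers to, and the function name is made up for illustration.

```python
# Figures quoted in the article.
AGGREGATE_EXAFLOPS = 20
GPUS_PER_CLUSTER = 20_000


def implied_petaflops_per_gpu(aggregate_exaflops: float, gpu_count: int) -> float:
    """Return the per-GPU throughput (in petaflops) implied by a cluster total."""
    # 1 exaflop = 1,000 petaflops
    return aggregate_exaflops * 1_000 / gpu_count


per_gpu = implied_petaflops_per_gpu(AGGREGATE_EXAFLOPS, GPUS_PER_CLUSTER)
print(f"Implied peak per GPU: {per_gpu:.1f} petaflops")
```

This works out to about 1 petaflop per GPU, which is a peak figure rather than sustained training throughput.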

The P5 instances are up to six times faster at training large language models and can cut training costs by 40% compared with the EC2 P4 instances, which are based on Nvidia’s previous-generation A100 GPUs, said Swami Sivasubramanian, vice president of database, analytics, and machine learning at AWS, during a keynote at the AWS Summit in New York.

“When you’re training these large language models, they are so large — hundreds of billions and trillions of parameters — that you can’t fit the whole model into memory at any one time and also be able to train. You have to be able to split that model up … across different chips in different instances,” said Matt Wood, AWS’s vice president of product, at the AWS Summit in New York.
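Wood's point about memory can be illustrated with back-of-the-envelope arithmetic. The sketch below assumes 80 GB of memory per H100, 2 bytes per parameter for 16-bit weights alone, and roughly 16 bytes per parameter once gradients and optimizer state are included. That last figure is a common rule of thumb for mixed-precision training, not something stated in the article, and the 500-billion-parameter model is a hypothetical example.

```python
import math

GPU_MEMORY_GB = 80  # assumption: 80 GB of memory per H100


def min_gpus_to_hold(params: float, bytes_per_param: float,
                     gpu_memory_gb: float = GPU_MEMORY_GB) -> int:
    """Lower bound on the GPUs needed just to hold the model state in memory."""
    total_gb = params * bytes_per_param / 1e9
    return math.ceil(total_gb / gpu_memory_gb)


params = 500e9  # hypothetical 500-billion-parameter model

# 16-bit weights only (2 bytes per parameter, an assumption)
print(min_gpus_to_hold(params, 2))

# weights + gradients + optimizer state (~16 bytes per parameter, a rule of thumb)
print(min_gpus_to_hold(params, 16))
```

Under these assumptions the weights alone need 13 GPUs and the full training state needs 100, before counting activations. That is why the model has to be split across chips and instances.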

Google earlier this year announced A3 supercomputers that can host up to 26,000 Nvidia H100 GPUs. Cerebras Systems last week announced it was deploying AI supercomputers in the cloud via Middle Eastern IT infrastructure company G42.

AWS has deployed its fastest interconnect to date for the P5 instances, with 3,200 Gbps of bandwidth between instances, which allows extremely fast synchronization of weights during training.
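To put the bandwidth figure in perspective, here is a small sketch of the ideal time to move a block of weights or gradients over a link of that speed. It ignores latency, protocol overhead, and the extra traffic of collective operations such as all-reduce, so real synchronization takes longer. The 2 GB payload (1 billion 16-bit values) is a hypothetical example.

```python
LINK_GBPS = 3200  # instance-to-instance bandwidth quoted in the article


def transfer_seconds(payload_bytes: float, link_gbps: float = LINK_GBPS) -> float:
    """Ideal time to push payload_bytes over the link, ignoring all overhead."""
    bytes_per_second = link_gbps * 1e9 / 8  # gigabits/s -> bytes/s
    return payload_bytes / bytes_per_second


payload = 1e9 * 2  # hypothetical: 1 billion values at 2 bytes each
print(f"{transfer_seconds(payload) * 1000:.1f} ms")
```

At 3,200 Gbps the link carries 400 GB per second, so the 2 GB example takes about 5 ms in the ideal case.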

“In addition to the Nvidia chip, we’ve done a lot of work at AWS in the background on the network fabric which enables this remarkably fast bandwidth, which is part of the key to driving the computing as fast as it can get,” Wood said.

Companies like Bloomberg have created their own large language models on AWS. Others are using AWS resources and existing LLMs to create custom machine-learning applications.

AWS is taking a divergent approach to tooling and middleware for customers to adopt machine learning.

Earlier this year, the company introduced Bedrock, which is more of a cloud-based playground where companies can try out and build large language models. Bedrock hosts many large language models, including Meta’s Llama 2, which was announced earlier this month.

At the Summit, AWS also announced the availability of Anthropic’s Claude 2.0 chatbot model on Bedrock. Bedrock will also support Stable Diffusion XL 1.0, which lets customers generate images from text input.

Amazon’s approach to AI differs from that of Google or Microsoft, which have their own proprietary models that are being integrated into search and productivity applications.

Amazon is instead looking to provide an exhaustive list of transformer models so customers can choose the best options.

Amazon also introduced a set of tools called Agents, which connect foundation models to outside data sources to provide more personalized answers to customers. The data could be stored on AWS or other hosts and could be connected via APIs.

For example, agents could help connect personal banking habits to LLMs to answer a customer’s financial questions.
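The general pattern described here (fetch data from an outside source, then hand it to a model along with the question) can be sketched in a few lines. This is not the Bedrock Agents API. Every function name, the customer ID, and the sample transactions below are hypothetical, and the model is a stand-in callable rather than a real LLM.

```python
from typing import Callable


def fetch_recent_transactions(customer_id: str) -> list[dict]:
    """Hypothetical data source; a real agent would call an external API here."""
    return [
        {"merchant": "Grocery store", "amount": 82.50},
        {"merchant": "Coffee shop", "amount": 4.75},
    ]


def build_prompt(question: str, transactions: list[dict]) -> str:
    """Combine the customer's data and question into one prompt for the model."""
    lines = [f"- {t['merchant']}: ${t['amount']:.2f}" for t in transactions]
    return "Customer transactions:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"


def answer_with_agent(question: str, customer_id: str,
                      model: Callable[[str], str]) -> str:
    """Fetch outside data, then pass it with the question to the model."""
    data = fetch_recent_transactions(customer_id)
    return model(build_prompt(question, data))


def echo_model(prompt: str) -> str:
    """Stand-in for a foundation model; it only reports how much context it got."""
    return f"(model received {len(prompt)} characters of context)"


print(answer_with_agent("How much did I spend on coffee?", "customer-123", echo_model))
```

Passing the model in as a callable keeps the data-fetching step independent of whichever foundation model is chosen.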

“Allowing customers to very quickly get an agent spun up that is up to date with their latest data [is] important for real world applications,” Vasi Philomin, vice president of generative AI at AWS, told HPCwire.

Most of the conversation today is around the types of models, but that may not be the case in the future.

“I think the models won’t be the differentiator. I think what will be the differentiator is what you can do with them. Agents for me is a first step towards doing something right with those models,” Philomin said.